Project Oriented Problem Based Learning (POPBL 1) – Advanced Attack Detection

This section describes the first POPBL project at Mondragon University for the Master's in Data Analysis, Cybersecurity, and Cloud Computing. In this case, the topic was proposed by the teaching staff.

The project combines the disciplines of data analysis, cybersecurity, and cloud computing into a comprehensive collaborative effort. The general goal was to build a cloud infrastructure on AWS with microservices simulating an industrial manufacturing environment, fronted by an API gateway (HAProxy, with Consul for service discovery) that handled HTTP requests to the microservices.

At the same time, we installed honeypots (Cowrie and Dionaea) to detect attacks and collect information about them in the Elastic Stack. With that data, we built an anomaly detection model (clustering or classification).

We eventually created a dashboard (Kibana) to gain deeper insights into the profiles of our attackers.

I’d like to highlight several tools that might otherwise go unnoticed since they don’t directly affect the project’s core functionality. These include Vault for secure secret management, Consul for service discovery across microservices, and CloudFormation for infrastructure provisioning on AWS.

If you want to learn more about the project’s development and structure, including its work plan and organization, you can explore the details here (available in English only).

POPBL 2 – ITAPP (Text Generator)

This section describes the second and final POPBL project at Mondragon University for the Master's in Data Analysis, Cybersecurity, and Cloud Computing. In this case, the topic was chosen by the students.

I’d like to highlight the social context in which this project took place, as it was during the peak of the COVID-19 lockdown. Even so, the group met virtually and organized themselves to carry out the project and overcome the challenges that arose.

The project was developed in the context of the 2020 U.S. presidential elections, where ITAPP (a fictional company) offered a tool that automatically generated personalized texts simulating the styles of candidates Donald Trump and Joe Biden.

This enabled more efficient use of social media, despite the limited time available during the campaign. The application integrated machine learning, automated version control, security measures (such as fuzzing with OWASP ZAP), and systems for data transport and storage (Elasticsearch, Hadoop), aiming to make the model's output easily accessible.

The solution’s design was based on data gathered from the Twitter and Tumblr APIs and from a dataset of political tweets. A model (built with Keras, distributed using Spark) was developed to generate coherent tweets from topics entered by users in the ITAPP application. The system continuously captured and cleaned new data to improve the model (Spark, Apache NiFi), whose results were published on the official POPBL Twitter account.

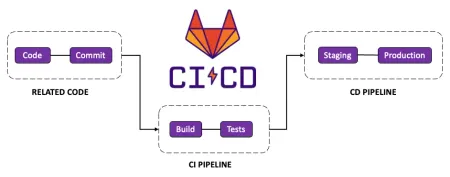

A CI/CD methodology (Kubernetes, GitLab CI/CD) was applied, including model validation stages, static code analysis, and uninterrupted deployment using a Blue-Green architecture. All development followed an agile methodology.
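To illustrate the Blue-Green idea mentioned above, here is a minimal Python sketch. It is not the project's actual deployment code: the `BlueGreenRouter` class, the version strings, and the `health_check` callback are hypothetical names introduced only for this example. The new version is deployed to the idle environment, and traffic is switched only if that environment passes its check.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreenRouter:
    """Hypothetical model of two identical environments, one of which is live."""
    versions: Dict[str, str] = field(
        default_factory=lambda: {"blue": "v1", "green": "v1"}
    )
    live: str = "blue"

    @property
    def idle(self) -> str:
        # The environment that is not receiving traffic right now.
        return "green" if self.live == "blue" else "blue"

    def release(self, new_version: str, health_check: Callable[[str], bool]) -> bool:
        """Deploy to the idle environment; switch traffic only if it is healthy."""
        target = self.idle
        previous = self.versions[target]
        self.versions[target] = new_version
        if not health_check(new_version):
            # Restore the idle environment; live traffic was never touched.
            self.versions[target] = previous
            return False
        self.live = target
        return True


router = BlueGreenRouter()
ok = router.release("v2", health_check=lambda version: True)
failed = router.release("v3", health_check=lambda version: False)
```

Because the previous version keeps running until the switch, a failed release leaves users on the old environment, which is what makes the deployment uninterrupted.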

If you want to learn more about the project’s development and structure, including its work plan and organization, you can explore the details here (available in English only).

GitLab CI/CD

During this hands-on assignment for the Master's in Data Analysis, Cybersecurity, and Cloud Computing, I developed a complete continuous integration and delivery (CI/CD) solution for a microservices-based system using Docker containers. The entire project followed an Agile methodology with short iterations, incremental deliveries, and constant validation through automated tests.

The goal was to automate the full testing, packaging, and deployment cycle of the "Orders" system from scratch. To achieve this, I designed multiple levels of testing (unit, integration, system, and smoke), developed a new feature using TDD (user deletion), and built a GitLab CI/CD pipeline split into three stages, capable of generating versioned containers ready for production.
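As an illustration of the TDD cycle for user deletion, the sketch below shows the kind of unit tests that could be written first, followed by the smallest implementation that makes them pass. The `UserService` class, `UserNotFound` exception, and in-memory storage are assumptions made for this example; they are not the real "Orders" code.

```python
import unittest


class UserNotFound(Exception):
    """Raised when a user id does not exist (hypothetical error type)."""


class UserService:
    """Hypothetical in-memory stand-in for the user part of the system."""

    def __init__(self):
        self._users = {}
        self._next_id = 1

    def create(self, name):
        user_id = self._next_id
        self._users[user_id] = name
        self._next_id += 1
        return user_id

    def exists(self, user_id):
        return user_id in self._users

    def delete(self, user_id):
        if user_id not in self._users:
            raise UserNotFound(user_id)
        del self._users[user_id]


class DeleteUserTest(unittest.TestCase):
    """Unit-level tests, written before the delete method in a TDD flow."""

    def setUp(self):
        self.service = UserService()

    def test_delete_removes_existing_user(self):
        user_id = self.service.create("ane")
        self.service.delete(user_id)
        self.assertFalse(self.service.exists(user_id))

    def test_delete_unknown_user_raises(self):
        with self.assertRaises(UserNotFound):
            self.service.delete(999)


def run_suite():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DeleteUserTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

In a pipeline, a suite like this would sit in the earliest stage, so that the slower integration, system, and smoke tests only run once the unit level is green.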

One of the key takeaways was understanding the value of testing in stages, how to organize a multi-stage environment using different docker-compose files, and how to ensure that production deployments do not break existing functionality. I also had the opportunity to work with custom runners in GitLab (a course requirement) and to carry out a manual deployment on AWS using EC2 instances. Overall, this project allowed me to touch every piece of the continuous delivery lifecycle.

That said, I pushed the project to an almost atomic level, and that same intensity also revealed its limits. In an academic setting, this was enriching; but in a professional context—as I experienced at ISEA—this level of control is rarely necessary (or at least, I haven’t encountered the need). Today, platforms like Vercel or Render allow us to safely and efficiently offload much of this process. Still, knowing how these tools work under the hood seems essential to me—not just for when you want to go deeper, but also to understand their limitations.

This project has been a cornerstone in solidifying my knowledge of CI/CD, pipelines, automated testing, and deployment best practices. It gave me a strong foundation—and the insight to know when to go all-in… and when it doesn’t really make sense.

Kubernetes

In this second hands-on project for the Master's in Data Analysis, Cybersecurity, and Cloud Computing, I delved into managing orchestrated deployment environments using Kubernetes, with the goal of automating the entire workflow of a microservices-based application.

Building on the previous CI/CD project, this time the focus shifted to designing robust environments (development, pre-production, demo, and production), implementing a canary-style gradual deployment strategy, and using Kubernetes as the core of the infrastructure. All development was organized through issues and milestones on GitLab, allowing me to structure tasks with a realistic and professional approach.

During the project, I worked with key tools and concepts such as:

- ConfigMaps and Secrets for configuration and credentials.

- Deployments and Pods to launch controlled versions.

- NodePort and ClusterIP Services for exposure and connectivity.

- Branch-based CI/CD pipeline (dev, canary, master) to automate deployments based on code state.

I prepared a system in which only code tested and validated by users (via the canary environment) would reach production, thus ensuring reliability at every step. Automating cluster provisioning, configuration loading, and container deployment—both in Minikube and Google Cloud—was a key part of the process.
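The branch-based gating described above can be sketched roughly as follows. The branch names (dev, canary, master) come from the list above, but the target environment names, the `BRANCH_TARGETS` table, and the `canary_validated` flag are assumptions made for illustration; the real pipeline was defined in GitLab CI/CD, not in Python.

```python
# Hypothetical mapping: branch names are from the text, target names are assumed.
BRANCH_TARGETS = {
    "dev": "development",
    "canary": "canary",
    "master": "production",
}


def deployment_target(branch, canary_validated=False):
    """Return the environment a pipeline run should deploy to, or None to skip."""
    target = BRANCH_TARGETS.get(branch)
    if target == "production" and not canary_validated:
        # Production is gated on the canary release having been validated.
        return None
    return target
```

For example, `deployment_target("master")` returns `None` until the canary release has been validated, and any branch outside the table is never deployed.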

This project also helped me clearly understand fundamental concepts of modern application architecture, such as high availability and horizontal and vertical scalability. Seeing how Kubernetes enables automatic scaling based on load, or how it ensures fault tolerance through pod replication, gave me valuable insight into what lies beneath any system deemed “production-ready.”
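To make load-based scaling concrete, the sketch below applies the core formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It is a simplification that ignores the tolerance band and stabilization behavior of the real controller. The function name and the `min_replicas` / `max_replicas` defaults are assumptions for this example, and the text above does not state which autoscaling setup the project used.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified replica calculation, clamped to assumed min/max bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Two pods averaging 90% CPU against a 50% target scale out to four pods.
scaled_out = desired_replicas(2, 90, 50)
# Four pods averaging 20% CPU against a 50% target scale in to two pods.
scaled_in = desired_replicas(4, 20, 50)
```

This is horizontal scaling (more pods); vertical scaling would instead change the CPU and memory assigned to each pod.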

Beyond the technical, this practice helped consolidate my knowledge of Kubernetes core units and gave me perspective on balancing control and efficiency. As I reflected in the previous project, diving deep into every layer can be very helpful for learning—but not always practical in real-world environments.

In experiences like the one I had at ISEA, I’ve found that in many cases, it’s more efficient to delegate parts of this infrastructure to platforms like Vercel, which abstract the complexity without sacrificing reliability. Even so, knowing how these systems work internally, down to their smallest units, has given me a solid foundation to decide when to go deeper—and when it's better to abstract.

Music Generator with Keras

Music has always been an important part of my life. I've played the piano since I was a child, and although my professional career revolves around software development and artificial intelligence, in this project I wanted to explore a crossover between both passions.

The challenge was to develop an automatic music generator using recurrent neural networks—specifically an LSTM (Long Short-Term Memory) architecture with Keras—trained on a dataset of compositions by Hans Zimmer in MIDI format (some of which I played and recorded myself using GarageBand for Mac).

While the goal was to generate realistic melodies, the real technical challenge was to get the model to learn meaningful musical patterns rather than just repeating random sequences. Preprocessing was key: MIDI files had to be converted into vector representations and the notes had to be properly encoded.
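The encoding step can be sketched in plain Python as follows. This is a minimal illustration, not the project's preprocessing code: it assumes the MIDI files have already been parsed into a list of note names (the parsing itself is omitted), and the helper names and the window size are hypothetical. Each note is mapped to an integer, and a sliding window turns the sequence into (input, next note) pairs of the kind an LSTM can be trained on.

```python
def build_vocabulary(notes):
    """Map each distinct note name to an integer index (sorted for determinism)."""
    return {note: index for index, note in enumerate(sorted(set(notes)))}


def make_training_windows(notes, vocabulary, window_size):
    """Slide a window over the sequence: each input window predicts the next note."""
    encoded = [vocabulary[note] for note in notes]
    inputs, targets = [], []
    for start in range(len(encoded) - window_size):
        inputs.append(encoded[start:start + window_size])
        targets.append(encoded[start + window_size])
    return inputs, targets


def one_hot(index, size):
    """Encode a class index as a one-hot vector, a common target format."""
    vector = [0] * size
    vector[index] = 1
    return vector


# Toy melody standing in for notes extracted from a MIDI file.
melody = ["C4", "E4", "G4", "E4", "C4", "G4"]
vocab = build_vocabulary(melody)
inputs, targets = make_training_windows(melody, vocab, window_size=3)
one_hot_targets = [one_hot(target, len(vocab)) for target in targets]
```

With this toy melody, the vocabulary has three entries and the six notes yield three training pairs; a real dataset would also need to represent chords, durations, and rests, which this sketch leaves out.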

During training, I fine-tuned the hyperparameters and validated the model by generating test fragments, listening to each one with both technical and musical criteria. The result isn’t a perfect symphony, but it is a demonstration of how AI can grasp—even partially—the structure of a language as abstract and emotional as music.

To close this section on a more personal note, I’ve included a short video of me playing the piano. It’s not about showcasing musical skill, but simply about sharing another side of myself: someone who, in addition to enjoying technology, also finds in music a way to relax and have fun.

If you’d like to learn more about the project’s development, you can explore the details here (available in English only).

Gogoa

In modern software development, tools like OpenAI’s ChatGPT and GitHub Copilot have become almost indispensable. Far from being a passing trend, they’ve become true allies that boost our productivity, extend our capabilities, and most importantly, allow us to focus on the creative and high-level aspects of each project. Adopting these tools isn’t just about efficiency—it’s about evolving alongside the technological environment we live in.

One of the projects that most demanded this kind of technological vision was a commission I received to work with the writings of Don José María Arizmendiarrieta—a key figure in Euskadi’s recent history and founder of the cooperatives that today form the Mondragón Corporation. His thinking, deeply humanistic and committed to social transformation through cooperativism, is captured in an extensive collection of handwritten notes that, until now, had not been categorized or made available by theme or in digital form.

The main goal of the project is to make this collection available through a web application, currently under development, that lets users browse the notes by theme and makes his legacy accessible to researchers, students, and anyone interested. To achieve this, AI techniques have been applied: both traditional ones, similar to those used in projects like GAIA, and modern ones, such as retrieval-augmented generation (RAG) systems integrated into ChatGPT.

During the development of the classification models—the technical core of the project—we encountered several significant challenges. One of them was the clear imbalance between categories: naturally, José María did not dedicate the same number of notes to each theme. This uneven distribution, combined with a small initial dataset (500 notes classified by the Association of Friends of Arizmendiarrieta), required a sophisticated approach. As a result, various AI techniques were applied to balance the training dataset, thereby improving the generalization capacity and robustness of the resulting models.
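The text does not say which balancing techniques were used, so the following is only one common option shown for illustration: random oversampling, where minority-class examples are duplicated until every class matches the largest one. The function name, the sample notes, and the theme labels are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def oversample(samples, seed=0):
    """Duplicate minority-class items at random until all classes are the same size."""
    if not samples:
        return []
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for text, label in samples:
        by_label[label].append((text, label))
    target = max(len(items) for items in by_label.values())
    balanced = []
    for items in by_label.values():
        balanced.extend(items)
        # Draw extra copies only for classes smaller than the largest one.
        balanced.extend(rng.choice(items) for _ in range(target - len(items)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical labeled notes: three for one theme, one for another.
notes = [
    ("note a", "work"),
    ("note b", "work"),
    ("note c", "work"),
    ("note d", "education"),
]
balanced = oversample(notes)
counts = Counter(label for _, label in balanced)
```

Oversampling should be applied to the training split only; duplicating examples before splitting would leak copies into the validation data and inflate the measured accuracy.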

Currently, a specialized company from Valencia is working on manually transcribing the handwritten notes, and we are awaiting that material in order to apply the trained models and continue with the automated classification and web publication process.

Elkarbide

Elkarbide is an established social network focused on the promotion of content related to innovation and entrepreneurship. Through the platform, users can publish ideas, share experiences, and connect with others to collaboratively develop projects. It also serves as an access point for useful information about public grants, both at the provincial and national levels, making it a practical and valuable tool for a wide range of users.

However, like many digital products that have been around for years, its interface has become outdated. The user experience no longer meets current standards in terms of accessibility, design, or efficiency. For this reason, I was tasked with leading a complete redesign of the platform, with the goal of fully modernizing it while preserving its core essence and functionality.

So far, a new visual proposal has been developed in Figma. The next step is to evaluate which parts of the previous system can be reused and which ones need to be rethought from scratch. This analysis is one of the most critical phases of any project: understanding both the starting point and the desired outcome is essential for defining a realistic strategy—both in software development and beyond.

In addition to redesigning the platform, this project is also an opportunity to learn and experiment with new technologies. The idea is to take advantage of the context to explore current tools and approaches like React Server Components, which can enhance the performance and scalability of modern web applications.

Ultimately, Elkarbide is a project that combines redesign, technical analysis, and continuous improvement, with the added benefit of including a training and research component that makes it even more enriching.

Portfolio

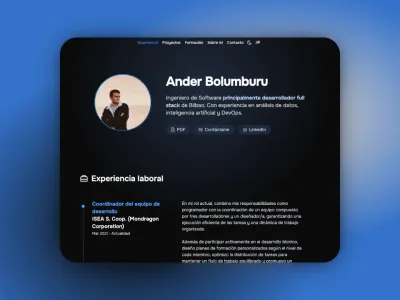

This portfolio is not just a showcase—it’s also a project I’ve developed with technical criteria and the intention that it will accompany me throughout my professional journey.

I built it using Astro, a framework I discovered specifically for this project and which I’ve found to be an excellent tool for creating fast, accessible, and easy-to-maintain websites. Thanks to its component-based approach and static site generation, I’ve managed to create a very lightweight site without sacrificing structure or modularity. It’s a good example of well-designed software: purpose-driven and free of unnecessary complexity.

Developing the portfolio has allowed me to put into practice many of the skills I’ve acquired over time: clear code organization, responsive design, smooth navigation, and transition management. Everything is deployed on Vercel, which lets me take advantage of its content delivery network (CDN) for optimal performance without any hassle.

Beyond being my calling card, this project is designed to be scalable and easily modifiable. Thanks to its structure, I can add new projects, experiences, or sections without touching more than necessary. Unless I feel like redesigning it just for aesthetics (which could totally happen 😄), there’s really no need to rebuild it from scratch.

Currently

I’m currently very focused on optimizing and refining how I develop software—not so much from the perspective of adding more features, but rather improving the performance, load behavior, and scalability of the applications I build. Although I’m already quite comfortable with many tools, I’ve identified areas for improvement, especially in how resources are loaded and how code is delivered to the client.

This process is pushing me to step out of my comfort zone and dive deeper into more modern technologies like React Server Components and the new Next.js App Router, which I’m currently exploring in depth. In doing so, I’m seeing first-hand the benefits of this approach: from reducing the amount of JavaScript sent to the browser to lowering client network dependency, resulting in a faster and more robust experience.

At the same time, I’m dedicating part of my free time to researching how to migrate applications built with the Pages Router (where every component is hydrated on the client) to the new Server Component-based system. It’s a technically rich exercise that’s helping me solidify what I’ve learned and identify reusable patterns for real-world migrations.

On a more personal level, I’ve gotten back into swimming and going to the gym with more discipline. I’ve always been involved in swimming, but during university and the pandemic, I had to pause my training. For about a year now, I’ve returned to regular workouts with clear goals. At the moment, I’m preparing to participate in the classic 100x100 held annually in Durango—a demanding but highly motivating challenge. Meanwhile, the gym has helped me maintain a steady routine and develop discipline and commitment—qualities that definitely carry over into my work as well.